Chatbots are getting more sophisticated every day, which is great news for educators because they can help with just about anything you do.

Educators can use chatbots to:

- Write long and short-form content for your courses

- Improve your writing with technical edits and a more academic tone

- Create step-by-step instructions to complete an assignment

- Summarize and organize research and case studies in seconds

- Generate engaging responses to discussion posts

- Double-check answers to test questions

Your students can too.

We’ll dig into everything you need to prevent students from using chatbots as well as how you can incorporate them into your courses.

Bookmark this page in case you need to reference it later!

To bookmark, press Ctrl+D (Windows) or Cmd+D (Mac)

Part 1: Chatbots 101

What they are, how they work, and prompting tips.

Types of chatbots & how they work

Generally speaking, most chatbots fall under two broad categories:

- Rule-based chatbots

- AI chatbots

Each includes many different types, and some chatbots even have features from both. We’ll quickly cover rule-based chatbots, then focus on AI-based chatbots.

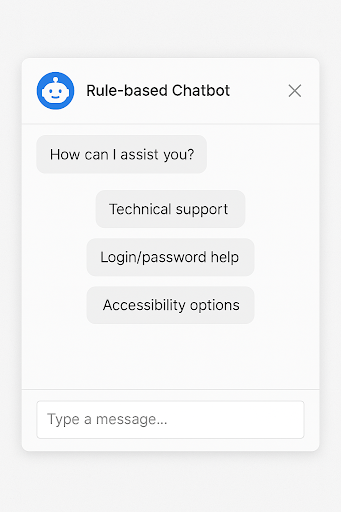

Rule-based chatbots

Rule-based chatbots follow set rules and give specific responses. They use an “if this, then that” model, which is simple, but can still handle complex interactions through a decision tree.

Some rule-based chatbots are very straightforward. For example, when you need support, the chatbot asks what it can help you with, then provides buttons for technical support, login/password issues, or accessibility options. Then, based on what you click, it takes you to the next branch of the decision tree. Some of these decision trees only have a few branches; others could have several hundred.
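A button-driven decision tree like this can be sketched in a few lines of Python. This is only a rough illustration, not how any particular support chatbot is built. The tree contents and the `walk` helper are hypothetical.

```python
# Hypothetical decision tree: each node has a message and, optionally,
# buttons that lead to the next branch.
TREE = {
    "start": {
        "message": "What can I help you with?",
        "buttons": {
            "Technical support": "tech",
            "Login/password issues": "login",
            "Accessibility options": "access",
        },
    },
    "tech": {"message": "Let's troubleshoot your device.", "buttons": {}},
    "login": {
        "message": "Do you need to reset your password?",
        "buttons": {"Yes": "reset", "No": "start"},
    },
    "reset": {"message": "A reset link is on its way.", "buttons": {}},
    "access": {"message": "Here are the accessibility settings.", "buttons": {}},
}


def walk(tree, clicks, node="start"):
    """Follow a sequence of button clicks and return the messages shown."""
    messages = [tree[node]["message"]]
    for click in clicks:
        node = tree[node]["buttons"][click]  # "if this, then that"
        messages.append(tree[node]["message"])
    return messages


print(walk(TREE, ["Login/password issues", "Yes"]))
```

Adding a branch just means adding another entry to the dictionary, which is how a tree like this could grow from a few branches to several hundred.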

While some use buttons, others basically identify keywords in your message that trigger the next step in the conversation. For example, if you say, “I need to reset my password,” the chatbot recognizes words like “reset” and “password” and takes you to that branch. If it doesn’t recognize what you’re saying/asking, it uses fallback responses to reroute the discussion, such as: “Can you rephrase that?” or “Are you looking for [topic]?”
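Keyword matching with a fallback response might look something like the sketch below. The rules, responses, and the `reply` function are made up for illustration; real rule-based chatbots usually have far more rules and smarter matching.

```python
import re

# Hypothetical keyword rules: if every keyword appears in the message,
# the chatbot jumps to that branch.
RULES = [
    ({"reset", "password"}, "Let's reset your password."),
    ({"accessibility"}, "Here are the accessibility options."),
]
FALLBACK = "Can you rephrase that?"


def reply(message):
    """Return the first matching branch, or the fallback response."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    for keywords, response in RULES:
        if keywords <= words:  # all keywords are present in the message
            return response
    return FALLBACK


print(reply("I need to reset my password."))
print(reply("What time is it?"))
```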

interface created by ChatGPT 4o

AI chatbots

AI chatbots are software designed—no surprises here—to chat with you. In this section, we’re focusing on generative AI chatbots like ChatGPT, Claude, and Gemini. From here on out, we’ll refer to them generally as AI chatbots, or just chatbots, unless we’re talking about other specific types.

Instead of answering from a fixed list of responses like rule-based chatbots, AI chatbots generate new, custom responses based on the insane amount of resources they’ve been trained on. Although it may seem like they’re copying from those resources, they’re basically just predicting what to say based on the patterns they’ve learned. That’s why they give you slightly different, custom responses even if you ask the same question twice.
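To see why the same question can produce different answers, here is a toy sketch. Real chatbots use large neural networks, not a small lookup table, and the word probabilities below are invented purely for illustration.

```python
import random

# Made-up probabilities for the word that follows "The weather is".
NEXT_WORD = {"sunny": 0.5, "cloudy": 0.3, "rainy": 0.2}


def complete(prompt, rng):
    """Pick the next word at random, weighted by its probability."""
    words = list(NEXT_WORD)
    weights = list(NEXT_WORD.values())
    return f"{prompt} {rng.choices(words, weights=weights, k=1)[0]}"


rng = random.Random()  # unseeded, so each run can differ
for _ in range(3):
    print(complete("The weather is", rng))
```

Because the next word is sampled rather than looked up, asking the same thing three times can give three different endings.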

Thanks to their underlying technologies, chatbots can understand what you’re saying, figure out what you really mean, and respond like a real person.

While AI chatbots each work a little differently, here’s a quick look at the foundational technologies they rely on:

- Large Language Models (LLMs): These are basically chatbots’ brains; they’re trained on tons of text to help chatbots understand and generate human-like responses.

- Machine Learning (ML): Allows chatbots to learn from examples and data so they can give better, more accurate responses over time.

- Natural Language Processing (NLP): Helps chatbots read and respond to your messages in a natural way.

- Natural Language Understanding (NLU): Helps chatbots figure out what you really mean. This goes beyond simple word-for-word interpretations to understand intent, sentiment, and context.

- Natural Language Generation (NLG): A part of NLP that helps chatbots generate human-like responses.

Which chatbot is best for educators?

It depends on the task. If it’s a general writing task, ChatGPT and Claude are probably your best bet. If it’s technical writing or coding, Gemini and DeepSeek Chat are good options.

ChatGPT can help you with every part of the writing process, whether it’s brainstorming lesson plans and drafting presentation content or writing objective exam rules and clear assignment instructions. It can also generate different types of text, edit and improve clarity, and adapt content to fit the needs of diverse learners.

- Best for: Creating instructional content, generating creative discussion prompts and case studies, checking grammar and punctuation, summarizing research, and adjusting text for different student levels and accessibility needs.

- Limitations: May produce generic or inaccurate content and requires fact-checking, especially for subject-specific accuracy.

Much like ChatGPT, Claude is a versatile writing tool for educators. It stands out for its ability to interpret and respond to complex prompts with greater nuance and contextual awareness while avoiding cookie-cutter language.

- Best for: Developing comprehensive course materials and in-depth resources like study guides and supplemental materials, as well as writing clear assignment instructions and providing structured feedback.

- Limitations: Can be less versatile than ChatGPT and may require more specific instructions to achieve desired outputs.

DeepSeek Chat is a newer chatbot that’s great for helping educators handle technical writing tasks. It can simplify really dense, complex information into clear, well-organized content that’s easy to understand.

- Best for: Organizing and presenting technical content for academic or administrative tasks, such as writing student performance reports, summarizing research, drafting test and assignment rules, and creating instructional guides.

- Limitations: May struggle with more creative writing tasks and can have difficulty picking up on sarcasm, figures of speech, and other language nuances.

Whether you’re asking technical questions for school/work, looking for life advice, or just yappin’ about how your day was, Pi is the perfect chatbot for it.

It’s built on its own homegrown LLM and designed to be more of a conversational partner than a “copilot” assistant. It can help you incrementally think through ideas, organize and process your thoughts and emotions, and work through tough choices with empathetic, thoughtful responses.

Another cool thing—and, surprisingly, a rare feature that the other popular chatbots don’t offer for some reason (it’s just text-to-speech)—is that Pi lets you choose from several realistic-sounding male and female AI voices with different accents to read its responses aloud.

Google’s AI assistant, Gemini, is a flexible tool for educators that can generate text, images, and code. Its capability to create different types of content is especially useful for lesson plans that include visuals, coding exercises, or interactive materials.

- Best for: Research-based writing, developing multimedia-rich instructional content, creating visual aids and coding examples, and generating structured study materials.

- Limitations: Gemini’s writing capabilities are improving, but there’s still a noticeable gap in depth and creativity compared to ChatGPT and Claude.

Microsoft Copilot is an AI assistant built into Microsoft apps (Word, Excel, etc.) and is also available as a standalone chatbot. It runs on the same large language model as ChatGPT but is geared more toward productivity and organization than creative writing.

- Best for: Creating course materials within Microsoft apps, summarizing research, and assisting with administrative writing tasks and organization.

- Limitations: May not be ideal for highly creative or in-depth content that requires more flexibility.

Perplexity looks and feels like a chatbot, especially now that some of them can access the internet, but teeeeechnically it’s different.

It’s an AI-powered conversational search engine and answer engine, which basically just means that it answers your questions by synthesizing and citing information from reliable sources on the internet.

NotebookLM allows you to upload PDFs, websites, YouTube videos, audio files, Google Docs, or Slides and summarize them or turn them into detailed outlines, study guides, and FAQs.

The video below gives you an idea of how it works. You can use its preset summary tools (FAQ, study guide, briefing document) and the questions it generates from the text, then save the responses to your notebook. You can also ask it specific questions, and it’ll answer with cited information from the text.

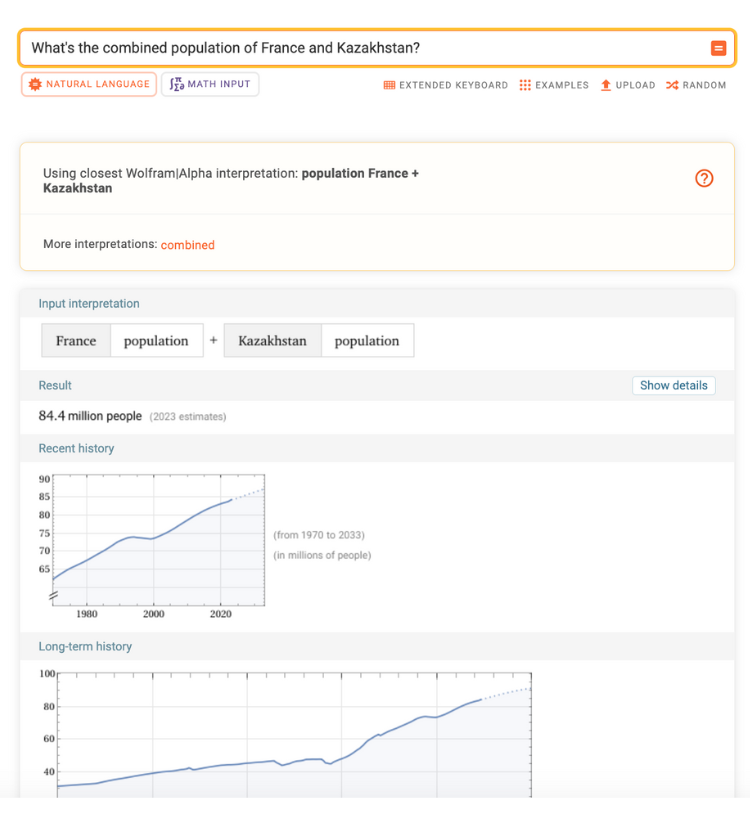

Wolfram Alpha isn’t a chatbot or a search engine, but it has elements of both. So, it’s a bit of something in between, plus a really smart calculator. Like a chatbot, it understands what you ask it, and it answers in a format similar to a search engine. But instead of chatting or showing links, it gives direct, computed answers based on built-in data and math.

Scholarcy is an AI summarizer with a few chatbot-like features. It quickly summarizes content, organizes it better than most chatbots, and creates flashcards to help you study. And, like chatbots, it lets you ask questions about the content and gives intelligent, helpful answers.

You can upload research papers, class notes, audio transcripts from online classes, etc., in these formats: PDF, Word, PowerPoint, HTML, XML, LaTeX, TXT, CSV, RIS, BIB, NBIB.

Chatbot Prompting 101

Prompt engineering is an extremely complex and delicate art form that requires advanced training and ongoing, relentless effort. Even then, only a few can hope to master AI prompting techniques… Sike.

Writing effective prompts is pretty simple. Just be clear and specific and provide context.

What's a prompt?

A prompt is the message you type into a chatbot or other generative AI tool to tell it what you want. A prompt can be a question, a request for help, or a task like summarizing text, generating code, or even translating text into other languages.

What is prompt engineering?

Prompt engineering is just the practice of writing your requests so you get the response you’re looking for from a chatbot. “Engineering” makes it sound really technical (sometimes it can be), but for most educators’ use cases, you just need to be clear and specific about what you want and provide details to help the chatbot tailor its response.

There are a ton of terms that make prompting seem like an extremely complex and technical process, but they mostly just describe things people naturally do when they use AI.

Take shot prompting, for example. It includes zero-shot, one-shot, and few-shot prompting, which simply refer to the number of examples you provide in your prompt to show the chatbot what you want.

- Zero-shot = 0 examples

- One-shot = 1 example

- Few-shot = a few examples
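If you ever assemble prompts in a script, the difference between the three comes down to how many examples you attach. Here is a minimal sketch; the `build_prompt` helper and the sample rules are illustrative assumptions, not part of any chatbot's API.

```python
def build_prompt(task, examples=()):
    """Build a prompt with zero, one, or a few (input, output) examples."""
    lines = [task]
    for source, result in examples:
        lines.append(f"Example input: {source}")
        lines.append(f"Example output: {result}")
    return "\n".join(lines)


task = "Rewrite the exam rule so it leaves no room for misinterpretation."
examples = [
    ("No talking during the test.",
     "No communicating with other individuals by any means."),
    ("Keep your desk clear.",
     "The testing area must be clear of all items except your device."),
]

zero_shot = build_prompt(task)               # 0 examples
one_shot = build_prompt(task, examples[:1])  # 1 example
few_shot = build_prompt(task, examples)      # a few examples
print(few_shot)
```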

Chatbot prompting tips for educators

Provide specific context and clear instructions

Providing specific, relevant details can improve the chances of getting the response you want.

Think of writing a prompt like writing a grocery list for someone who’s shopping for you. The chatbot will usually get the items on your list, but if you want something specific—like a brand, flavor, or amount—you have to say so.

If you want vanilla ice cream, adding “snacks” to your list doesn’t really help. Adding “ice cream” is better, but you still might get the wrong flavor.

Be specific: ½ gallon of Haagen-Dazs Vanilla Ice Cream.

Details to consider providing when writing prompts for AI chatbots:

- Voice: Who should it write like? e.g., “Write as a historian” or “Write like [specific person]”

- Tone: Casual, formal, humorous, academic, dramatic?

- Audience: Who’s reading this? Students? What kind—third graders, college freshmen, PhD researchers? What’s their major? How much do they already know?

- Format: How should the response be provided to you? Paragraphs or bullet points? If you want a chart or table, be specific about the type, how many rows and columns, titles, etc.

- Length: How many words, sentences, paragraphs, bullet points, etc.?

- Context: Any key details? What information and nuances are needed?

- Examples: Provide good and bad examples so the chatbot understands what you want.
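One way to make sure you don't forget any of these details is to treat them as fields you fill in. The sketch below assumes a hypothetical `detailed_prompt` helper that simply appends each detail to your request; you would paste the result into whichever chatbot you use.

```python
def detailed_prompt(request, **details):
    """Append each detail (voice, tone, audience, ...) to the request."""
    lines = [request]
    for name, value in details.items():
        lines.append(f"{name.capitalize()}: {value}")
    return "\n".join(lines)


prompt = detailed_prompt(
    "Explain how to prevent cross-contamination in a kitchen.",
    voice="a food safety instructor",
    tone="casual",
    audience="participants preparing for a Food Safety Manager Certification exam",
    format="bullet points",
    length="five bullet points",
)
print(prompt)
```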

Prompt examples

Use these prompting examples and templates as a starting point, and tailor your prompt with information specific to your assignments and assessments.

Template: Based on the text I provided, create [number] [question type] questions to assess [audience] on their understanding of [topic(s)]. [Q&A requirements and specifications.]

Example: Based on the text I provided, create 30 multiple-choice questions to assess participants preparing for a Food Safety Manager Certification exam on their understanding of methods to prevent cross-contamination. Each question should be objective, concise, and written in plain language. Provide four answer choices per question, with only one correct answer.
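If you reuse the same template across courses, you could store it once and fill in the blanks each time. This is a small sketch using Python's built-in string formatting; the field names (`number`, `question_type`, and so on) are our own labels for the bracketed placeholders in the template.

```python
# The bracketed placeholders from the template, written as named fields.
TEMPLATE = (
    "Based on the text I provided, create {number} {question_type} questions "
    "to assess {audience} on their understanding of {topic}. {requirements}"
)

prompt = TEMPLATE.format(
    number=30,
    question_type="multiple-choice",
    audience="participants preparing for a Food Safety Manager Certification exam",
    topic="methods to prevent cross-contamination",
    requirements="Provide four answer choices per question, with only one correct answer.",
)
print(prompt)
```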

More tips on how to write better exam questions.

Template: Based on the following [question type] questions, create a [alternative question type] question(s) that assesses the same underlying concepts or skills. The new question should encourage [objective/outcomes (e.g., critical thinking and practical application rather than recall)]. [Add questions here.]

Provide a clear explanation of your reasoning for how you adapted the original questions into this format. Then, include a concise description of what an appropriate student response should contain.

Example of the 1st paragraph from above: Based on the following multiple-choice questions, create an essay question that assesses the same underlying concepts or skills. The new essay question should encourage critical thinking and practical application rather than recall. [Add questions here.]

Note: If you’re repurposing the question for a different audience or subject, briefly describe them so the chatbot can adapt its response.

Learn more about writing better exam questions.

Writing objective exam rules is waaaay trickier than it seems. Many times, rules that seem crystal clear can still be misinterpreted by test takers. Here’s a complete guide with examples of objective exam rules, and you can give the prompt below to a chatbot.

Prompt to use: Create objective online rules for test takers. The rules should comprehensively address:

*Behavior (e.g., no talking or using cell phones to look up answers)

*System requirements (e.g., computer with a functioning webcam and microphone)

*Test environment (e.g., quiet room, clear desk/surface, no other people present)

Use direct, unambiguous language that leaves no room for misinterpretation. Provide the rules in a numbered list that can be copied and pasted into the assessment platform/LMS.

Here are two examples of basic test rules followed by improved versions. Use the improved versions as examples of the level of clarity and specificity expected as you create the set of rules:

*Rule 1 (basic): No talking during the test.

*Rule 1 (improved): No communicating with other individuals by any means, whether verbal, non-verbal, or electronic.

*Rule 2 (basic): Your desk must be clear of all items except for the device you use to take the test.

*Rule 2 (improved): The testing area and any surface your device is placed on must be clear of all items except the device used to complete the test. This includes books, papers, electronics, and other personal belongings.

Note: When you type an asterisk (*) at the start of a line, chatbots, generally speaking, get the gist that you’re trying to create a bullet point.

Template: Based on the project instructions I provided below, create a rubric that evaluates [add what’s being assessed, e.g., specific skills and competencies]. Each item should include [type of performance scale, e.g., a five-point numeric scale from 1 to 5 (1 = Novice, 5 = Excellent) or a three-point scale with the levels: Excellent, Developing, and Needs Improvement].

Format the rubric in a [type, e.g., table or chart] to use in [format, e.g., Word Doc, Google Sheets, LMS, etc.]. Here are the assignment details and instructions: [add information]

Example: Based on the project instructions I provided below, create a rubric that evaluates practical application skills. Each item should include five performance levels using a numeric scale from 1 to 5 (1 = Novice, 5 = Excellent).

Format the rubric in a table to use in a Word Doc and include a column for written feedback. Here are the assignment details and instructions: [add information]

Note: This prompt template is just that: a template—a starting point. You’ll really need to fill in the blanks with as much information and context as possible—learning goals, topics/subjects, specific characters, realistic issues and scenarios—to help the chatbot tailor the case study to your course needs.

Create a [length]-word case study about [specific scenario/situation] for [audience, e.g., undergraduate business students, professionals in a certification program, etc.].

The case study should include:

*Comprehensive, realistic background information about [scenario/situation]

*Key characters, stakeholders, and/or groups involved

*Specific opportunities, challenges, and decision points for the learner to evaluate

*Five short-answer questions that require in-depth problem-solving and a proposed plan to address the situation

Write an engaging two-part discussion prompt for [audience, e.g., undergraduate business majors, adult learners in professional development programs, etc.] focused on [topic/subject/situation].

Part 1 (initial response): Prompt learners to connect their real-world experiences and/or prior knowledge to [topic/subject/situation] by sharing specific examples or situations that highlight how the topic applies to their personal, academic, or professional life.

Part 2 (respond to peers): Ask learners to review and analyze at least two of their peers’ posts and respond with [e.g., thoughtful questions, alternative viewpoints, etc.] to extend the discussion.

Prompts that summarize text—whether it’s an entire research paper or a paragraph from an article—can be as simple as, “Summarize this.”

But you can also ask the chatbot to summarize it in specific formats or focus on certain topics. Just copy and paste the text into the chatbot or upload the document, then start the prompt with something like “summarize” or “simplify” (or any other related terms), and then include details on the length, format, and focus.

Here are a few example prompts you can try:

- Summarize this research study into 2-3 concise sentences, then provide bullet points on the important information in each section, especially the sections about data collection methods and results.

- Simplify this text and explain it in practical, easy-to-understand language.

- Explain what this text is saying in simple terms that [audience] can understand.

- Shorten this text into a [resource type, e.g., study guide, FAQ, etc.] for [audience].

I’m a [job role] using this image in my [online course module, presentation, article, etc.] about [subject/topic]. Please write the following for the image:

- Descriptive alt text (approximately 125 characters or fewer)

- Detailed image description for accessibility

- Suggested file name (use lowercase letters and hyphens, no spaces)

- Text caption to display alongside the image

Note: You can upload images to most AI chatbots, such as ChatGPT, Gemini, and Claude.

Don't overthink it

- Don’t worry about order: Chatbots use machine learning to process your whole prompt at once, so the order of the information you provide usually doesn’t matter that much. Just focus on providing the right information.

- Write naturally: Chatbots can “understand” everyday language (even our slang and sarcasm) thanks to a technology called natural language processing, which is part of how large language models (LLMs) work. So, you don’t need to use special wording or commands. Just write to it like you would a real person, and it’ll usually get the gist of what you’re saying.

Fact-check everything

Always, always, always fact-check chatbot responses because they don’t generate text based on facts. They just predict what word is most likely to come next.

Treat all chatbot conversations like public conversations

Regardless of whether it’s a public or private chatbot, don’t include any personally identifiable information (PII) or sensitive organizational details in your prompts or files you upload (Excel, Word, etc.).

Anonymize information to protect learners, yourself, and your organization. For example, use “University/Company ABC” instead of your company name, or “Student/Employee A” instead of specific names.
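If you have a lot of text to clean up, a short script can swap names for placeholders before you paste anything into a chatbot. This is a rough sketch under simple assumptions: the `anonymize` helper and the sample names are made up, it only catches the names you list, and it won't detect other PII like student IDs or email addresses, so still review the text yourself.

```python
import re


def anonymize(text, names, label="Student"):
    """Replace each known name with a placeholder such as 'Student A'.

    Works for up to 26 names (A to Z) and ignores capitalization.
    """
    for index, name in enumerate(names):
        placeholder = f"{label} {chr(ord('A') + index)}"
        text = re.sub(re.escape(name), placeholder, text, flags=re.IGNORECASE)
    return text


notes = "Jordan Lee missed the quiz. Email jordan lee and Sam Ortiz about a retake."
print(anonymize(notes, ["Jordan Lee", "Sam Ortiz"]))
# prints "Student A missed the quiz. Email Student A and Student B about a retake."
```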

Part 2: Pros & Cons of Chatbots in Higher Education

The good, the bad, and the ugly behind chatbot use in higher ed.

Pros of AI chatbots in higher education

When used appropriately, chatbots can benefit educators and students. We focus on student benefits here, but Part 3 covers educators’ benefits and uses.

Personalizes learning experiences

Whether chatbots are used independently or integrated with other learning tools, their ability to personalize learning experiences is a key benefit that overlaps with nearly every benefit we cover in this section.

Increases engagement

AI chatbots’ ability to provide students with immediate quality feedback helps create a more engaging and interactive learning experience (Bhutoria, 2022). By adjusting their feedback to match each student’s needs and preferences, chatbots help keep students engaged and make it easier for them to remember what they’ve learned (Labadze et al., 2023).

This personalized, interactive learning doesn’t just increase engagement, it actually makes learning more enjoyable for students (Song & Song, 2023; Walter, 2024). And in some cases, chatbots can increase students’ motivation, interest in the learning materials, and participation in the course (Mai et al., 2024; Nguyen, 2023), which can encourage them to seek out more information to learn in-depth (Wood & Moss, 2024).

It’s worth noting, though, that chatbots tend to have a greater impact on engagement and motivation when students don’t know the material as well (Liu & Reinders, 2025). This is likely because students who are knowledgeable and interested in the subject simply don’t need chatbots to stay motivated and engaged.

Improves learning and academic performance

AI chatbots can significantly improve learning and academic performance in higher education, but the benefits depend on how students use chatbots and how often (Liu et al., 2022; Sánchez-Vera, 2025; Schei et al., 2024; Stojanov et al., 2024; Wu & Yu, 2023).

For example, Sánchez-Vera (2025) found that students who used chatbots moderately alongside their regular study methods were better prepared for exams and outperformed students who used them too little or too much. In other words, chatbots can help students learn, but there’s a point of diminishing returns when they’re overused. The sweet spot is when students use them as a supplement to regular studying.

Stojanov et al. (2024) found that students’ views of their academic performance can influence the ways they use chatbots and how often they rely on them:

- “High-achievers” don’t use chatbots that often, but when they do, it’s usually to reinforce what they learned or to learn more about a topic.

- “Average” students use chatbots more than high-achievers, typically to help them complete specific parts of their assignments.

- “Struggling” students rely on chatbots the most and usually use them to clarify confusing topics and simplify content to make it easier to understand.

Most students use chatbots for basic things like defining terms and comparing concepts, but some rely on them to understand how those concepts apply to real-world situations (Sánchez-Vera, 2025). This aligns with findings from Zhong et al. (2024), where students who used ChatGPT had a better understanding of key terms and concepts related to specific subjects/fields.

While AI chatbots seem to be the most beneficial for university students (Wu & Yu, 2023), a common challenge for students at all levels (higher ed, primary, and secondary) was that they didn’t fully understand what chatbots could do or know how to ask effective questions, which limited their ability to make the most of the tool (Sánchez-Vera, 2025; Wu & Yu, 2023).

Provides real-time, quality feedback and support

Nearly every study we use in this guide emphasizes how chatbots’ personalized, real-time, quality feedback can help students learn, reflect, and stay engaged. They can tailor responses to each student’s needs and help with many different types of learning activities, including improving their writing, learning through debates, and setting goals while tracking progress during study sessions. Chatbots can also identify where students are struggling and respond with diverse, personalized support, such as follow-up questions or quizzes, which provide an interactive and low-pressure way to reinforce learning (Deng & Yu, 2023).

While some students find chatbot feedback easier to read and more organized than instructors’ feedback, it’s not a good idea to rely on it for grading since it usually lacks nuance and doesn’t always match the feedback instructors would give (Dai et al., 2023; Neuman, 2021; Schei et al., 2024). For example, chatbots’ responses can be too long, not personalized, or overly positive in some cases.

Overall, though, chatbots are effective tools that students can use to get immediate feedback that can help them learn.

Acts as a tutor and learning partner

The feedback from chatbots naturally extends to their ability to be great tutors and study partners for students. They can simplify complex information, organize tons of text, and turn different course content into study guides and quizzes. They also create a more relaxed, nonjudgmental environment where students feel comfortable asking questions (Klos et al., 2021).

Encourages independent learning

Chatbots help students learn independently, take ownership of their progress, and play a more active role in directing their own learning (Creely, 2024; Sánchez-Vera, 2025).

Because chatbot responses adapt to individual needs, they offer a personalized, flexible experience that supports self-paced learning (Creely, 2024; Deng & Yu, 2023).

They also support independent learning through self-assessment, self-monitoring, goal setting, and progress tracking. For example, chatbots can provide quizzes or engage in debates and discussions to help students test their knowledge and reflect on their understanding (Chang et al., 2023; Deng & Yu, 2023). They can also help students plan study sessions by setting specific objectives and checking in throughout to stay on track (Creely, 2024; Pellas, 2025).

Reduces cognitive load

When students’ workload is high, they’re likely to turn to chatbots and any tool they can find to help manage it all (Hasebrook et al., 2023; Koudela-Hamila et al., 2022). Chatbots can help reduce cognitive load by handling smaller tasks and simplifying complex information (Imundo et al., 2024; Pellas, 2025; Pergantis et al., 2025).

For example, students can reduce cognitive load and cut down on busy work by prompting chatbots to:

- Summarize course materials into their preferred format

- Explain complex information in simple terms

- Create lists of priority information to focus on

- Edit their work for technical corrections

- Fix formatting of references and citations

Helps manage stress and anxiety

Beyond lightening students’ mental workload, chatbots can help reduce stress and anxiety. Whether students are stressed out the night before a big exam and need some words of encouragement or help with a specific topic, or they’re just feeling anxious about life, they can get the support they need at any time (Klos et al., 2021; Liu et al., 2022).

A study by Wu & Yu (2024) found that chatbots also create a relaxed environment for students that allows them to take a step back when they need a break or to process their emotions. And students are pretty comfortable with opening up to chatbots because it feels like the conversations are more private and nonjudgmental (Klos et al., 2021). Some chatbots can also recognize signs of stress during conversations with students, which allows them to adjust and offer support, whether it’s simple words of encouragement or walking them through calming strategies to help them manage their emotions (Liu et al., 2022).

Pi AI is a great chatbot for students to vent to. It even has some pre-built conversation tracks, like “Let it rip,” which encourages you to vent and provides extra resources to help you learn to better regulate your emotions.

Improves accessibility

Chatbots can make learning more accessible for all students, especially those with disabilities or those learning a new language. They can help with things like turning speech into text, translating content, or simplifying information and presenting it in specific ways, like for students with dyslexia.

Not only can this support learning, but it can also build confidence, like when chatbots provide side-by-side translations to strengthen vocabulary and reading skills (Evmenova et al., 2024).

Cons of AI chatbots in higher education

Overreliance

While many students are initially interested in using chatbots, the novelty can wear off pretty quickly, especially when the interactions are surface-level (Deng & Yu, 2023; Wu & Yu, 2024). Surface-level interactions, where the responses seem vague or repetitive, frustrate students and can cause them to disengage.

At this point, students may not use chatbots to learn more, but they could still rely on them as a shortcut to do their work for them rather than working through problems or thinking critically about the material (Aad & Hardey, 2025; Abbas et al., 2024).

Chatbots can also take the place of meaningful conversations with instructors and classmates, which are often what keep students interested and involved in their learning over time (Aad & Hardey, 2025). Without that human connection, students may begin to disengage and, again, only rely on the chatbot for answers (Abbas et al., 2024).

Inaccurate information

Although AI companies are incrementally reducing how often their models “hallucinate,” it still happens pretty often. Hallucinations are when AI models make things up and generate incorrect, misleading, or nonsensical information (Susnjak & McIntosh, 2024). These responses can seem pretty convincing because of how confidently chatbots generate them.

But that’s not surprising for two reasons:

1. They generate what sounds right, not what is right

Chatbots generate responses by predicting what words or phrases are most likely to come next. It’s basically like a really, really, REALLY advanced version of the predictive text your phone uses to guess what you’ll type next when you’re texting someone. So, even if it’s wrong, chatbots may still generate it because it sounds like the best fit to come next.

2. They’re only as accurate as what they’re trained on

Chatbots can’t fact-check like humans can (or should), and they’re only as accurate and reliable as the resources they’ve been trained on. They don’t have a built-in understanding of what’s true or false or a way to objectively verify anything. Their only method of “fact-checking” is to refer back to what they were trained on.

For the sake of a simple example, imagine they were trained only on factually incorrect articles. Those articles, errors and all, become the AI’s source of truth, which is part of why they can confidently generate inaccurate information (Susnjak & McIntosh, 2024).
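Both points can be seen in a toy next-word predictor. This is nowhere near how a real LLM is built (those are huge neural networks), and the training sentences below are deliberately wrong and made up for illustration. The `train` and `predict_next` helpers are hypothetical names.

```python
from collections import Counter, defaultdict


def train(sentences):
    """Count which word follows each word in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            follows[current][following] += 1
    return follows


def predict_next(follows, word):
    """Return the word seen most often after `word`, or None if unseen."""
    counts = follows.get(word.lower())
    return counts.most_common(1)[0][0] if counts else None


# Deliberately wrong training text: the model has no way to know that.
model = train([
    "the capital of france is berlin",
    "the capital of france is berlin",
    "the capital of france is paris",
])
print(predict_next(model, "is"))  # prints "berlin"
```

The model answers “berlin” because that’s what showed up most often after “is” in its training text, not because it checked whether it’s true.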

Academic dishonesty

The numbers vary depending on the study, but it’s fair to say that at least 80-90% of college students cheat at some point. And now, recent student surveys indicate that just about the same percentage use chatbots.

| Survey author (month/year) | # of students surveyed | % of students who use chatbots |
|---|---|---|
| Deschenes & McMahon (Jun 2024) | 360 | 59-65% |
| Gruenhagen et al. (Jun 2024) | 337 | 80% |
| Digital Education Council (Jul 2024) | 3,839 | 86% |
| Higher Education Policy Institute (Feb 2025) | 1,041 | 88% |

Of the 1,041 students who completed the HEPI survey, 88% said they use AI on their assessments. The survey by Gruenhagen et al. (2024) also found that many students use chatbots to “help” with assessments, such as looking up information or getting example responses. Even worse, many didn’t see it as cheating.

No matter how you look at it, the math doesn’t favor academic integrity.

Biases

As we mentioned earlier, chatbots are only as good as the resources they’re trained on. If the resources are biased, the chatbot’s responses may be biased. But how the systems are designed and the people who make them also play a role in this bias (Dwivedi et al., 2023; Wach et al., 2023).

The biases might be subtle and unintentional, but they can still show up in how topics are covered, what information is prioritized, and the perspectives that are emphasized (Chapelle et al., 2024; Shankar et al., 2025).

Moral of the story: People are biased and so is everything they make.

Privacy risks

Chatbots collect anything students, faculty, and staff put into them, which creates privacy risks. For example, a faculty member might upload a document that includes students’ personally identifiable information. It’s not always clear how that data is used or stored either. That information could be used to train future AI models or even misused and exposed in some situations.

Part 3: Using chatbots in your courses

Simple ways for faculty to save time and improve processes.

Chatbots can be a valuable support tool for instructors that can save time and help with course design, developing content for assignments and assessments, and even with adapting course materials into varying formats for students with disabilities.

Assisting with basic areas of course design

AI can support instructors throughout every phase of course design, from shaping learning objectives to refining materials based on student feedback. Here are a few ways chatbots can help with each stage of the course design process:

- Analyze and Design: Brainstorming and improving learning objectives that align with course goals.

- Develop and Implement: Developing and repurposing assessments, assignments, and other course materials and activities. Chatbots can also help adapt content for diverse learners, such as:

  - Writing alt text for images and video

  - Adapting text for students with specific disabilities (e.g., simplifying, chunking, and organizing assignments for students with dyslexia)

- Evaluate and Review: Analyzing student performance data or course feedback to improve future courses.

Grading written assignments?

You can use chatbots to review written assignments and grade them based on your rubric. But just because you can doesn’t mean you should. As mentioned earlier, chatbot feedback usually isn’t as detailed or nuanced as what faculty provide, and it often doesn’t align with the instructor’s feedback (Dai et al., 2023).

That said, you can use chatbots as a tool to summarize and outline an essay before you read it as a way to get a quick overview of what’s discussed or identify areas to focus on. However, some educators have fair ethical concerns about using public or unsecured chatbots, since submitting student work may share it with the company and contribute to future model training.

Refine your test rules, instructions, and guidelines

Writing comprehensive, objective, and clear test rules is way trickier than it seems. Even rules that seem straightforward can still be misinterpreted (or exploited) by students because of subtle word choices. Here’s a complete guide with examples of objective exam rules, and you can give the prompt below to a chatbot.

Example prompt

Prompt to use: Create objective online rules for test takers. The rules should comprehensively address:

*Behavior (e.g., no talking or using cell phones to look up answers)

*System requirements (e.g., computer with a functioning webcam and microphone)

*Test environment (e.g., quiet room, clear desk/surface, no other people present)

Use direct, unambiguous language that leaves no room for misinterpretation. Provide the rules in a numbered list that can be copied and pasted into the assessment platform/LMS.

Here are two examples of basic test rules followed by improved versions. Use the improved versions as examples of the level of clarity and specificity expected as you create the set of rules:

*Rule 1 (basic): No talking during the test.

*Rule 1 (improved): No communicating with other individuals by any means, whether verbal, non-verbal, or electronic.

*Rule 2 (basic): Your desk must be clear of all items except for the device you use to take the test.

*Rule 2 (improved): The testing area and any surface your device is placed on must be clear of all items except the device used to complete the test. This includes books, papers, electronics, and other personal belongings.

Note: When you type an asterisk (*) at the start of a line, chatbots, generally speaking, get the gist that you’re trying to create a bullet point.

Translating text

Most chatbots are pretty good at translating text into other languages. But sometimes the translations can feel a bit off. Even if the meaning is right, the phrasing might come across as too literal or awkward.

The prompt can be as simple as this: Translate this to [language]: [add your text]

Localizing text

Chatbots can localize text for you. For example, an American instructor teaching a class of UK students can use a chatbot to swap terms like “proctor” to “invigilator” and adjust spelling from “analyze” to “analyse.”

Summarizing audio transcripts

While some video conferencing tools provide audio transcripts, they aren’t always organized and easy to follow. Faculty can upload the document or paste the text into the chatbot, then prompt it to format the transcript however they’d like (e.g., paragraph summaries or bullet points with timestamps), organize it into topics and sections, or repurpose it into entirely new resources such as a discussion prompt or study guide.

Accessibility

Chatbots can make learning more inclusive and accessible by:

- Translating text in real-time

- Adapting, simplifying, and organizing content for students with learning or cognitive disabilities

- Tailoring responses to students’ learning styles and preferences

- Generating alternative text for multimedia content

- Adjusting responses based on students’ emotions (e.g., offering words of encouragement when a student is frustrated or discouraged)

While they can help improve accessibility, chatbots also need to be accessible themselves by working with screen readers, supporting assistive technologies, and offering voice dictation.

Part 4: Preventing Cheating

Assessment strategies and technology to protect academic integrity.

To control and prevent chatbot cheating, you’ll need to use technology and adapt your assessments and assignments. You can use one or the other, but it won’t be as effective and meaningful. Why? Because you can adapt your assessments and assignments all you want, but without the right technology, cheating will still happen. Even worse, you might know it’s happening but have no way to prove it.

Older-ish versions of ChatGPT passed these exams, which raises a pretty fair question: If ChatGPT can handle exams like these, are yours out of its reach?

AI detection

Detecting AI-generated content is difficult for educators and AI detection tools, but in different ways. Educators are usually less accurate than AI detection tools and often struggle to tell whether the text was written by a student, generated by AI, or altered using other AI tools (Kofinas et al., 2025).

AI detection tools are usually accurate when the text is entirely AI-generated, but obviously, their accuracy drops when students alter the content themselves or run it through AI paraphrasing tools (Liu et al., 2024; Weber-Wulff et al., 2023).

Online proctoring

Exams, written assignments, math tests, virtual presentations/demonstrations, software-based projects, open-book exams, and just about any other course activities you can think of have at least one thing in common: they can be remotely proctored.

Honorlock’s hybrid proctoring solution combines AI with live virtual proctors, which means that whether students are writing an essay, virtually presenting a mock business plan, or creating a balance sheet in Excel, they won’t be able to do the following (unless the instructor allows it):

- Use chatbots or software and access websites

- Refer to books or notes unless approved by the instructor

- Paste pre-copied content (e.g., text or formulas)

- Search for information on their cell phones

- Ask voice assistants like Siri or Alexa for answers

As mentioned earlier, students won’t be able to do any of those things unless the instructor allows it. With Honorlock, faculty can easily adjust settings to let students access certain websites (e.g., specific research articles or case studies), use specific software for a task or project, or use physical resources (e.g., books or pen and paper).

Realistic, scaffolded assessments and assignments

Now that chatbots can accurately answer most test questions and write reasonably well on nearly any topic, it’s time to shift away from traditional assessments and written tasks. Educators should focus on developing scaffolded assignments and assessments that challenge students to show what they’ve learned and apply it in realistic situations, often referred to as experiential learning or authentic tasks, using diverse formats that go beyond writing and picking the right answers.

"Authentic" needs context

What makes an assignment or assessment feel authentic depends on the course and the kinds of work students may do in the future. For example, writing book reviews may be authentic in English classes, but in a nursing course, creating a patient care plan would be a more relevant, authentic activity.

Not every course will connect directly to a student’s future job, especially general education or electives, which can make cheating more likely. But as you’ll see in the examples below, scaffolded, realistic assignments can still be flexible enough to let students connect the work to their own interests while showing what they’ve learned.

So, this won’t always work everywhere or for everyone, but the general approach, or even just parts of it, can be adapted to some extent.

A few tips from Chapman et al. (2024) for educators to consider when creating authentic assessments and assignments:

- Incorporate recent, relevant real-world examples: Find ways to incorporate content and tasks that are timely and specific to the student (e.g., local news/issues, job/field, etc.). Although some chatbots have access to the internet, it can still be difficult for AI tools to respond appropriately because these tasks require context and personalized responses.

- Use genuinely authentic tasks/activities: Your assignments and assessments should reflect realistic situations students will commonly face at work. As we mentioned earlier, “authentic” depends on the course, but it can involve things like students using industry software/tools, working in groups, or running virtual simulations, for example. These help students build real-world professional skills, give instructors a clearer picture of what students know and can do, and make it harder for students to rely on AI.

- Include self/peer assessments: Having students assess their own work and give feedback to peers helps them reflect on what they’ve learned and consider different perspectives while building accountability and collaboration skills.

- Make connections to specific course content: Require students to reference specific course activities and/or materials. For example, ask students to connect a concept/discussion from a recent lecture to a case study discussed in class.

Two example assignments

Mock business assignment

Assignment 1: Create a start-up business

Outline the following:

- What products/services do you sell? What is the pricing model?

- What is your motivation for choosing to start this business? Personal (i.e., it’s a passion of yours); financial (i.e., there’s a definite need and it will be profitable); both (i.e., it’s a passion of yours and will surely be profitable); not currently provided (i.e., it’s a product/service that the world needs but doesn’t have); social (i.e., it’s a good/service that’s purely for the greater good of society); etc.

- How long would it take to start your company (i.e., “opening your doors”)?

- Where do you sell them (e.g., online, in-store, both)?

- What are your expenses and overhead costs?

- Provide a brief list of your competitors and their offerings.

- What economic/environmental/political/world events impact your business? (e.g., weather & seasons; location; etc.)

Assignment 2: Finding your business on the internet

Write a brief summary (75 words or less) of internet search terms, then list common keyword search terms your customers use to find information about your business and the relevant/related industry/market products and services.

Use [add a few options of tools] to conduct keyword research. Create a list of the keywords and their approximate monthly search volume in Excel or Google Sheets. Column A = Search term. Column B = Approx. monthly search volume

Assignment 3: Brand, marketing, and advertising

Consider your motivation for starting this business and create:

- Your company mission statement (1-4 sentences)

- Brand statement/tagline (1 sentence)

- Advertisements for your business (2 options):

  - 15-30 second video commercial for your business

  - 5 ads (designed images)

Explain where you would advertise your business (internet search, social media, TV, print, radio, etc.) and why you chose that method. Be specific about the platforms, search engines, or other outlets that you would use, and support your choices with reasoning related to your target audience.

Assignment 4: Visual SWOT analysis

Consider [specific parts of earlier assignment tasks] and develop a chart/visual SWOT analysis. After completing the visual, identify the two most significant strengths and the two most pressing weaknesses that will directly impact your business’s ability to remain profitable. Explain why you consider each to be the most critical and how it directly affects your ability to generate revenue and maintain a competitive advantage.

Assignment 5: Competitors devil’s advocate

Considering the SWOT analysis, how would you beat your business if you were your competitors?

Assignment 6: Market/industry prediction

Create a timeline (with a 500-word write-up) of the ways the market/industry will shift/evolve within the next 5 years. What will cause those changes? What do companies need to do to survive? [Add other relevant questions]

Cite at least 3 expert sources (e.g., peer-reviewed journals, industry reports, articles/books by recognized authorities) as part of your rationale.

Assignment 7: Company evolution based on predictions

Create a 5-10 minute explainer video (voiceover with on-screen visual) describing your market/industry timeline, then explain why/how your company will survive market/industry changes in the future.

Mock science assignment

Assignment 1: Identify the environmental challenge

Identify a specific environmental challenge within your local community or region (e.g., water pollution in a local river, invasive species in a park, air quality issues in a specific neighborhood, loss of biodiversity in a local ecosystem).

Describe the following in approx. 500 words or less:

- Provide a detailed description of the challenge and its severity and potential consequences.

- Explain why you chose this challenge and how it impacts you and your community.

Assignment 2: Background research

Review existing research related to your chosen environmental challenge:

- Scientific studies, reports, and data from credible sources (e.g., peer-reviewed journals, government agencies, reputable environmental organizations).

- Summarize the findings from five of those sources (250 words or less for each source).

Identify local coverage

- Find any public-facing information regarding the challenge in your community (e.g., local government press releases; local news/journalist coverage such as articles and videos; social media posts with photos/videos about the challenge). Provide links (or files) to information you find.

- If you cannot locate any public-facing information, create a brief (350 words or less) newspaper opinion piece acting as an investigative reporter and provide photo/video examples.

  - You can take photos/videos of the challenge in your area or find photos/videos from other locations that face similar challenges.

    - e.g., if your environmental challenge is stream/river pollution, provide photos/videos you took or find photos/videos from other areas with stream/river pollution (include links to your reference photos).

Assignment 3: Elevator pitch about the challenge

Imagine you step onto an elevator and it’s just you and a local government official who can help take action to address the environmental issue. You have about 30 seconds for an elevator pitch educating the official about the environmental challenge and its negative impact on your community. Ideally, the pitch should motivate the official to take action.

Deliverables:

- 30-40 second video recording of your elevator pitch

- 250-500 word written explanation of how your elevator pitch educates the government official and potentially motivates them to take action.

- Specifically address how you connected your pitch to the official’s responsibilities and priorities, such as policy implications, economic impact, community well-being, resource management, and legal/regulatory concerns. Provide specific examples from your pitch to illustrate your points.

Assignment 4: Develop a proposed solution

Based on your research and data analysis, develop a proposed solution to address the environmental challenge.

- Explain the scientific basis/concept for your proposed solution.

- Discuss the potential benefits and drawbacks of it.

- Cite at least 3 expert sources that support the concept/rationale behind your solution.

Assignment 5: Public awareness campaign

Identify your target audience (e.g., residents, businesses, schools, local government) and develop a public awareness campaign to educate them about the environmental challenge you are investigating.

Create a simple PR campaign that includes:

- Social media content: Create 3-5 social media posts (images, short videos, infographics) that educate your audience about the challenge, its impact, and how they can help.

- Community event: Plan a community event (e.g., workshop, fundraiser, cleanup activity, etc.)

  - Provide a bulleted list of:

    - Event purpose and goals

    - Where the event will take place

    - What is expected of attendees (e.g., clean up, recruit others, build items, collect information, etc.)

    - How you will promote the event

- Local media outreach: Identify local media contacts you would reach out to (e.g., specific journalists and/or news agencies, etc.)

  - Describe how you would contact them and provide what you would say.

    - e.g., If you choose to email a journalist, provide the email text you would provide. If you would call them, how would you introduce yourself and describe the challenge whether they answer or if you leave a voicemail?

Deliverable: A campaign portfolio that includes your social media content, a detailed plan for your community outreach event, and a draft of your press release or letter to the editor. Include a written justification (500 words) of your campaign strategy.

Assignment 6: Present your findings

Create a video presentation about your research findings and proposed solution to a specific audience (e.g., community members, policymakers, scientists).

- Explain the scientific concepts clearly.

- Use supplemental visuals.

- Explain why you chose the solution.

Deliverable: A presentation and a brief written justification for your communication strategy.

Part 5: AI Policies & Training

What to cover in your AI policies and training for students and faculty.

AI policies & training

Before we dive into this section, it’s important to note that AI policies help to formally establish guidelines and expectations about AI use. But it’s equally important to be clear that AI policies won’t stop students from using AI to cheat.

Remember the findings from the 2025 survey of more than 1,000 students? 76% said their institution has clear AI policies, yet 88% use AI on assessments. You could argue that maybe their instructors allowed it (the report didn’t clarify), but realistically and logically, that’s unlikely when most students said that they use AI to explain concepts.

Overall, though, higher education institutions are mostly supportive of integrating generative AI when it’s done responsibly and cautiously, and many provide guidelines for specific roles and communication channels to share those expectations with stakeholders (An et al., 2025; Jin et al., 2024; McDonald et al., 2024).

The study by An et al. (2025) analyzed over 200 AI policy-related documents from 50 R1 universities in the US. These policies were either institution-wide or specific to faculty, students, researchers, or administrators. The guidelines for faculty and administrators were highly positive, while those for students and researchers had a cautious tone focused on misuse of AI, limitations, and academic integrity.

The most common topics addressed in these documents:

- AI integration in learning and assessment

- What the tools are and how they can be used to create different types of content

- Limitations like ethical and security issues

- Academic integrity

Some of the general recommendations from the study are reflected in the tips below.

AI policy tips

Include a syllabus statement

Clearly define if and how students can use different generative AI tools (not just chatbots), and whether their use is fully allowed, allowed with limitations, or not allowed at all.

Create your policy and communicate it

Establish your own course-specific rules for GenAI use and communicate them to students through email and post them in different areas of the LMS, like course announcements, discussions, etc.

Rethink your assignments and assessments

Consider redesigning assignments and assessments to emphasize real-world tasks, iterative work, and higher-order thinking.

Understand AI and how it works

Familiarize yourself with different AI tools, what they can do, their limitations, and how they can impact teaching and learning.

Don’t rely solely on AI detection tools

An et al. found that 19 of the 50 universities advised against using AI detection tools due to concerns about inaccuracy and bias, while others suggested they can be used with caution as part of a broader review process rather than as the sole source of truth.

Use AI as a tool, not a replacement

Many of the institutions that recommended incorporating AI into teaching emphasized using it as a tool to support instruction, not as a replacement for the instructor. The guidelines encouraged thoughtful integration that challenges students to think critically about the learning materials.

The University of Florida recommends three approaches that may help address AI use by defining the expectations and tools students can use in assignments and when it’s appropriate to use them:

1. AI-Permitted: Generative AI tools may be required in this course. Generative AI use is promoted in some assignments and will be clarified in assignment instructions. Any work that is done using generative AI must be cited in your submission.

2. Some AI: Generative AI tools may be used to enhance some assignments in this course. Assignment instructions will differentiate between distinct human and AI tasks. Any work that is done using generative AI must be cited in your submission.

3. No AI: The learning that takes place in this course requires your unique perspective and human experience. Use of AI would make it harder to evaluate your work. It is not permitted to use any generative AI tools in this course, and the use of AI will be treated as an academic integrity issue.

Follow academic integrity policies

Unauthorized use of generative AI tools can be considered cheating or plagiarism. However, if your instructor allows AI use, make sure you follow their guidelines and cite it properly.

Check each instructor’s policies

Since each instructor and course may have different policies, it’s important to read the syllabus and ask questions before using any AI (including tools like Grammarly). Some courses may allow limited use with proper citation, while others may not permit it at all. When in doubt, check with your instructor.

Know the limitations and always fact-check

Always fact-check what chatbots generate for you, since it can be inaccurate, biased, or even completely made up.

AI training tips

Potential topics to cover during faculty training:

- Break down how AI works: Help faculty understand what AI can and can’t do by explaining it in simple terms. Focus on the basics like its strengths, limitations, and how it actually generates responses.

- Prompting tips: While prompting is pretty simple overall, sharing example prompts and general tips can help faculty understand how it works and maybe even spark some new ideas for how they use AI in their courses.

- Show real examples in teaching: Demonstrate how AI can be used in different teaching situations, such as writing/rewriting test questions or adapting content for different uses (e.g., creating study guides based on PPT text). Let them try it out and/or use case studies to see how it fits into real classroom settings.

- Help design/redesign assessments and assignments: Work with faculty to build assignments that include AI in a clear and meaningful way. Tools like the TILT framework can help make the purpose and expectations clear to students.

- Discuss AI limitations and risks: Cover topics like privacy concerns, potential biases, and the importance of using AI ethically and responsibly.

- Teach faculty how to talk to students about AI: Help faculty explain how AI works, where it can be useful in coursework, and what its limitations are. They should explain and give examples of what counts as appropriate use, when AI is or isn’t allowed, how to cite it, and what would be considered misconduct.

Students want training and support on all things AI—what it is, where it gets the information from, and how to use it during their course work (Deschenes & McMahon, 2024).

Here are a few topics to cover with students:

- How to incorporate it into their course work

- Guidance on how to create the right prompts

- Information on how chatbots work

  - Include details on limitations such as inaccurate information, biases, and privacy.

- Academic integrity

  - Students prefer specific guidelines on AI use (Hamerman et al., 2024). So, thoroughly explain what’s considered academic dishonesty and talk through situations to help them understand what’s allowed or not.

Part 6: Starting AI initiatives

Considerations for institutions starting AI initiatives.

Thinking about starting or expanding your AI initiatives? It’s a big effort with a lot of moving parts and people. It takes time, resources, and long-term commitment, but the impact is well worth it.

Why AI is worth it

Whether your institution is looking to integrate AI across all your courses or just starting small, here are a few good reasons to start:

- Recruitment and retention: Offering AI education and access to AI tools helps recruit and retain students and faculty (especially those who conduct research).

- Committed and competitive: Integrating AI into teaching, learning, and research helps students and faculty build the skills they need to keep up, and it shows the institution is committed to staying ahead and focused on the future.

- Supports workforce and community needs: AI initiatives can build support from all sorts of stakeholders like industry partners, donors, and policymakers, while helping prepare students to use AI effectively in their future work.

Who's involved?

AI initiatives need a coordinated, diverse team with expertise across academic, technical, and administrative areas. Each institution is different, so titles and responsibilities may vary.

In many cases, one person (such as the initiative’s champion) may take on multiple roles. That said, here’s a quick list of roles and responsibilities that’ll likely be involved:

Champion(s)

Champions are the ones who get the ball rolling, keep things moving, and make sure everyone’s on board and aligned. They’re usually involved in multiple areas.

Administrative leadership

Administrative leadership may include:

- President: Supports the initiative publicly and helps secure buy-in from trustees, donors, and external leaders.

- Provost: Leads and oversees academic integration of AI and guides the approval of new AI-related curriculum.

- Deans: Help lead AI efforts within their colleges and keep faculty informed and involved.

Technology leadership

Depending on the institution, one or all of these roles may be involved to implement the technologies and systems and keep everything secure.

CIOs and CTOs play key roles in starting and maintaining AI initiatives by managing the systems, tools, and support needed to run AI across the institution. The Chief Information Security Officer (CISO) may also be involved, focusing on protecting data, reducing risk, and making sure AI tools meet privacy and security standards.

Funds & funding

- Chief Financial Officer: Oversees financial planning, budgeting, and cost management for the AI initiative, and supports long-term sustainability by aligning funding sources with institutional priorities.

- VP Advancement: Engages alumni and donors to support the AI initiative financially, positioning it as a transformative opportunity for the university.

Other considerations

- Accessibility compliance: While several roles we’ve mentioned may contribute, dedicated involvement from an Accessibility & Compliance Officer or similar roles helps support all learners and confirm that accessibility and privacy requirements like ADA and FERPA are met.

- Government affairs: Builds and maintains connections with state and federal leaders, as well as industry partners to attract funding and participate in larger industry initiatives/goals for AI in education.

- Communications and marketing: Raises awareness and interest, creates understanding, and builds support. This includes internal messaging to students, faculty, and staff, as well as external outreach to policymakers, donors, and the broader community.

Getting buy-in

You need students, faculty, and staff on board with any AI initiative. Students are usually quick to adopt new technologies, especially when they know it’ll impact them in the future. Getting buy-in from faculty and staff can be trickier. Some may not understand the purpose. Some may not like it. Some simply might not be interested.

But regardless of where they are on the spectrum, there’s a pretty good chance that some are already using AI in their work, even if they don’t necessarily consider it AI. For example, Siri and Alexa are conversational AI, and even tools you might not think of, like Microsoft Word and Grammarly, have AI built in.

The point is that this everyday use counts as a small win (we use “wins” loosely) and can be a starting point. From there, you can move on to surveys, focus groups, small-scale workshops, and faculty training and development that could generate interest and enthusiasm. As interest grows, try hosting in-person or virtual events where students, faculty, and staff can learn about AI, get their questions answered, and test out some of the tools.

References

Aad, S., & Hardey, M. (2025). Generative AI: Hopes, controversies and the future of faculty roles in education. Quality Assurance in Education, 33(2), 267–282. https://doi.org/10.1108/QAE-02-2024-0043

Abbas, M., Jam, F. A., & Khan, T. I. (2024). Is it harmful or helpful? Examining the causes and consequences of generative AI usage among university students. International Journal of Educational Technology in Higher Education, 21(1), 10–22. https://doi.org/10.1186/s41239-024-00444-7

An, Y., Yu, J. H., & James, S. (2025). Investigating the higher education institutions’ guidelines and policies regarding the use of generative AI in teaching, learning, research, and administration. International Journal of Educational Technology in Higher Education, 22(1), 10–23. https://doi.org/10.1186/s41239-025-00507-3

Bhutoria, A. (2022). Personalized education and Artificial Intelligence in the United States, China, and India: A systematic review using a Human-In-The-Loop model. Computers and Education: Artificial Intelligence, 3, 100068.

Chang, D. H., Lin, M. P.-C., Hajian, S., & Wang, Q. Q. (2023). Educational design principles of using ai chatbot that supports self-regulated learning in education: Goal setting, feedback, and personalization. Sustainability, 15(17), 12921-. https://doi.org/10.3390/su151712921

Chapman, E., Zhao, J., & Sabet, P. G. P. (2024). Generative artificial intelligence and assessment task design: Getting back to basics through the lens of the AARDVARC Model. Education, Research and Perspectives, 51, 1–36.

Creely, E. (2024). Exploring the role of generative ai in enhancing language learning: opportunities and challenges. International Journal of Changes in Education, 1(3), Article 3. https://doi.org/10.47852/bonviewIJCE42022495

Dai, W., Lin, J., Jin, H., Li, T., Tsai, Y.-S., Gašević, D., & Chen, G. (2023). Can large language models provide feedback to students? A case study on ChatGPT. 2023 IEEE International Conference on Advanced Learning Technologies (ICALT), 323–325. https://doi.org/10.1109/ICALT58122.2023.00100

Deng, X., & Yu, Z. (2023). A meta-analysis and systematic review of the effect of chatbot technology use in sustainable education. Sustainability, 15(4), Article 4. https://doi.org/10.3390/su15042940

Deschenes, A., & McMahon, M. (2024). A survey on student use of generative ai chatbots for academic research. Evidence Based Library and Information Practice, 19(2), Article 2. https://doi.org/10.18438/eblip30512

Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., Baabdullah, A. M., Koohang, A., Raghavan, V., Ahuja, M., Albanna, H., Albashrawi, M. A., Al-Busaidi, A. S., Balakrishnan, J., Barlette, Y., Basu, S., Bose, I., Brooks, L., Buhalis, D., … Wright, R. (2023). Opinion paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. International Journal of Information Management, 71, 102642. https://doi.org/10.1016/j.ijinfomgt.2023.102642

Evmenova, A. S., Borup, J., & Shin, J. K. (2024). Harnessing the power of generative ai to support all learners. TechTrends, 68(4), 820–831. https://doi.org/10.1007/s11528-024-00966-x

Gruenhagen, J. H., Sinclair, P. M., Carroll, J.-A., Baker, P. R. A., Wilson, A., & Demant, D. (2024). The rapid rise of generative AI and its implications for academic integrity: Students’ perceptions and use of chatbots for assistance with assessments. Computers and Education: Artificial Intelligence, 7, 100273. https://doi.org/10.1016/j.caeai.2024.100273

Hasebrook, J. P., Michalak, L., Kohnen, D., Metelmann, B., Metelmann, C., Brinkrolf, P., Flessa, S., & Hahnenkamp, K. (2023). Digital transition in rural emergency medicine: Impact of job satisfaction and workload on communication and technology acceptance. PLOS ONE, 18(1), e0280956. https://doi.org/10.1371/journal.pone.0280956

Imundo, M. N., Watanabe, M., Potter, A. H., Gong, J., Arner, T., & McNamara, D. S. (2024). Expert thinking with generative chatbots. Journal of Applied Research in Memory and Cognition, 13(4), 465–484. https://doi.org/10.1037/mac0000199

Jin, Y., Yan, L., Echeverria, V., Gašević, D., Martinez-Maldonado, R. (2024). Generative AI in higher education: A global perspective of institutional adoption policies and guidelines. arXiv:2405.11800v1. https://doi.org/10.48550/arXiv. 2405.11800

Klos, M. C., Escoredo, M., Joerin, A., Lemos, V. N., Rauws, M., & Bunge, E. L. (2021). Artificial intelligence–based chatbot for anxiety and depression in university students: Pilot randomized controlled trial. JMIR Formative Research, 5(8), e20678. https://doi.org/10.2196/20678

Kofinas, A. K., Tsay, C. H.-H., & Pike, D. (2025). The impact of generative AI on academic integrity of authentic assessments within a higher education context. British Journal of Educational Technology. https://doi.org/10.1111/bjet.13585

Koudela-Hamila, S., Santangelo, P. S., Ebner-Priemer, U. W., & Schlotz, W. (2022). Under which circumstances does academic workload lead to stress? Journal of Psychophysiology, 36(3), 188–197. https://doi.org/10.1027/0269-8803/ a000293

Labadze, L., Grigolia, M., & Machaidze, L. (2023). Role of AI chatbots in education: Systematic literature review. International Journal of Educational Technology in Higher Education, 20(1), 56. https://doi.org/10.1186/s41239-023-00426-1

Liu, J. Q. J., Hui, K. T. K., Al Zoubi, F., Zhou, Z. Z. X., Samartzis, D., Yu, C. C. H., Chang, J. R., & Wong, A. Y. L. (2024). The great detectives: Humans versus AI detectors in catching large language model-generated medical writing. International Journal for Educational Integrity, 20(1), 8–14. https://doi.org/10.1007/s40979-024-00155-6

Liu, L., Subbareddy, R., & Raghavendra, C. G. (2022). AI Intelligence Chatbot to Improve Students Learning in the Higher Education Platform. Journal of Interconnection Networks, 22(Supp02), 2143032. https://doi.org/10.1142/S0219265921430325

Liu, M., & Reinders, H. (2025). Do AI chatbots impact motivation? Insights from a preliminary longitudinal study. System (Linköping), 128, 103544-. https://doi.org/10.1016/j.system.2024.103544

Mai, D. T. T., Da, C. V., & Hanh, N. V. (2024). The use of ChatGPT in teaching and learning: A systematic review through SWOT analysis approach. Frontiers in Education, 9. https://doi.org/10.3389/feduc.2024.1328769

McDonald, N., Johri, A., Ali, A., & Hingle, A. (2024). Generative artificial intelligence in higher education: Evidence from an analysis of institutional policies and guidelines. arXiv:2402.01659.

Neumann, A. T., Arndt, T., Köbis, L., Meissner, R., Martin, A., de Lange, P., Pengel, N., Klamma, R., & Wollersheim, H.-W. (2021). Chatbots as a Tool to Scale Mentoring Processes: Individually Supporting Self-Study in Higher Education. Frontiers in Artificial Intelligence, 4. https://doi.org/10.3389/frai.2021.668220

Nguyen Thanh, B., Vo, D. T. H., Nguyen Nhat, M., Pham, T. T. T., Thai Trung, H., & Ha Xuan, S. (2023). Race with the machines: Assessing the capability of generative AI in solving authentic assessments. Australasian Journal of Educational Technology, 39(5), 59–81. https://doi.org/10.14742/ajet.8902

Pellas, N. (2025). The role of students’ higher-order thinking skills in the relationship between academic achievements and machine learning using generative AI chatbots. Research and Practice in Technology Enhanced Learning, 20, 36-. https://doi.org/10.58459/rptel.2025.20036

Pergantis, P., Bamicha, V., Skianis, C., & Drigas, A. (2025). AI Chatbots and Cognitive Control: Enhancing Executive Functions Through Chatbot Interactions: A Systematic Review. Brain Sciences, 15(1), 47-. https://doi.org/10.3390/brainsci15010047

Sánchez-Vera, F. (2025). Subject-specialized chatbot in higher education as a tutor for autonomous exam preparation: Analysis of the impact on academic performance and students’ perception of its usefulness. Education Sciences, 15(1), 26-. https://doi.org/10.3390/educsci15010026

Schei, O. M., Møgelvang, A., & Ludvigsen, K. (2024). Perceptions and Use of AI Chatbots among Students in Higher Education: A Scoping Review of Empirical Studies. Education Sciences, 14(8), 922-. https://doi.org/10.3390/educsci14080922

Shankar, S. K., Pothancheri, G., Sasi, D., & Mishra, S. (2025). Bringing Teachers in the Loop: Exploring Perspectives on Integrating Generative AI in Technology-Enhanced Learning. International Journal of Artificial Intelligence in Education, 35(1), 155–180. https://doi.org/10.1007/s40593-024-00428-8

Song, C., & Song, Y. (2023). Enhancing academic writing skills and motivation: Assessing the efficacy of ChatGPT in AI-assisted language learning for EFL students. Frontiers in Psychology, 14. https://doi.org/10.3389/fpsyg.2023.1260843

Stojanov, A., Liu, Q., & Koh, J. H. L. (2024). University students’ self-reported reliance on ChatGPT for learning: A latent profile analysis. Computers and Education: Artificial Intelligence, 6, 100243. https://doi.org/10.1016/j.caeai.2024.100243

Susnjak, T., & McIntosh, T. (2024). ChatGPT: The end of online exam integrity? Education Sciences, 14(6), 656-. https://doi.org/10.3390/educsci14060656

Walter, Y. (2024). Embracing the future of Artificial Intelligence in the classroom: The relevance of AI literacy, prompt engineering, and critical thinking in modern education. International Journal of Educational Technology in Higher Education, 21(1), 15. https://doi.org/10.1186/s41239-024-00448-3

Wach, K., Duong, C. D., Ejdys, J., Kazlauskaitė, R., Korzynski, P., Mazurek, G., Paliszkiewicz, J., & Ziemba, E. (2023). The dark side of generative artificial intelligence: A critical analysis of controversies and risks of ChatGPT. Entrepreneurial Business and Economics Review, 11(2), Article 2. https://doi.org/10.15678/EBER.2023.110201

Weber-Wulff, D., Anohina-Naumeca, A., Bjelobaba, S., Foltýnek, T., Guerrero-Dib, J., Popoola, O., Šigut, P., & Waddington, L. (2023). Testing of detection tools for AI-generated text. International Journal for Educational Integrity, 19(1), 26–39. https://doi.org/10.1007/s40979-023-00146-z

Wood, D., & Moss, S. H. (2024). Evaluating the impact of students’ generative AI use in educational contexts. Journal of Research in Innovative Teaching & Learning, 17(2), 152–167. https://doi.org/10.1108/JRIT-06-2024-0151

Wu, R., & Yu, Z. (2024). Do AI chatbots improve students learning outcomes? Evidence from a meta-analysis. British Journal of Educational Technology, 55(1), 10–33. https://doi.org/10.1111/bjet.13334

Zhong, T., Zhu, G., Hou, C., Wang, Y., & Fan, X. (2024). The influences of ChatGPT on undergraduate students’ demonstrated and perceived interdisciplinary learning. Education and Information Technologies, 29(17), 23577–23603. https://doi.org/10.1007/s10639-024-12787-9