AI isn’t new to online learning (or in-person). Educators use it to automate tasks that eat up time, analyze data, and even customize learning paths. While some of the AI tools you’ll see aren’t widely used in online education (yet), they could—and probably should—be soon.

But keep this in mind: AI can help educators create equitable learning environments and accessible online courses, but it can’t replace educators.

AI to improve equity & accessibility in online courses

Emotion AI

Emotion AI analyzes our behavior, how we speak, and what we write, then associates these with specific emotions. There are three primary types of emotion AI: video, text, and voice.

Video Emotion AI

Analyzes body language, facial expressions, gestures, and other movements to determine emotional states.

Text Emotion AI

Identifies emotions based on the tone, word choices, patterns, and other aspects of written text.

Voice Emotion AI

Listens to vocal characteristics like volume, pitch, and pace to determine emotional states.

Considerations before using emotion AI

Emotions are complex, and how we express them varies, so keep these points in mind to use emotion AI effectively and ethically:

- Each person expresses emotions differently.

- Physical conditions or disabilities may limit facial expressions or cause unintended body movements, which could give inaccurate feedback on emotional states.

- Cultural differences can impact behavior, gestures, speech, etc.

- For example, eye contact is a sign of interest and respect in some cultures, while other cultures avoid eye contact to show respect.

Video Emotion AI

Video emotion AI uses a combination of technologies to analyze and understand facial expressions and body language, like posture, gestures, and eye contact.

- Computer vision AI helps computers see the world as humans do by analyzing and interpreting the environment and objects around them.

- Facial expression recognition detects facial expressions and microexpressions by analyzing facial landmarks.

- Body language and gesture recognition identify emotions based on body movements and gestures. For example, touching the face can indicate stress, and tilting the head can indicate confusion.

How to use video emotion AI in online courses:

- Understand and accommodate nonverbal communication: helps those with conditions and disabilities communicate through gestures, for example.

- Measure engagement: detects signs of disinterest and confusion, for example, which can allow educators to tailor course activities in real time.

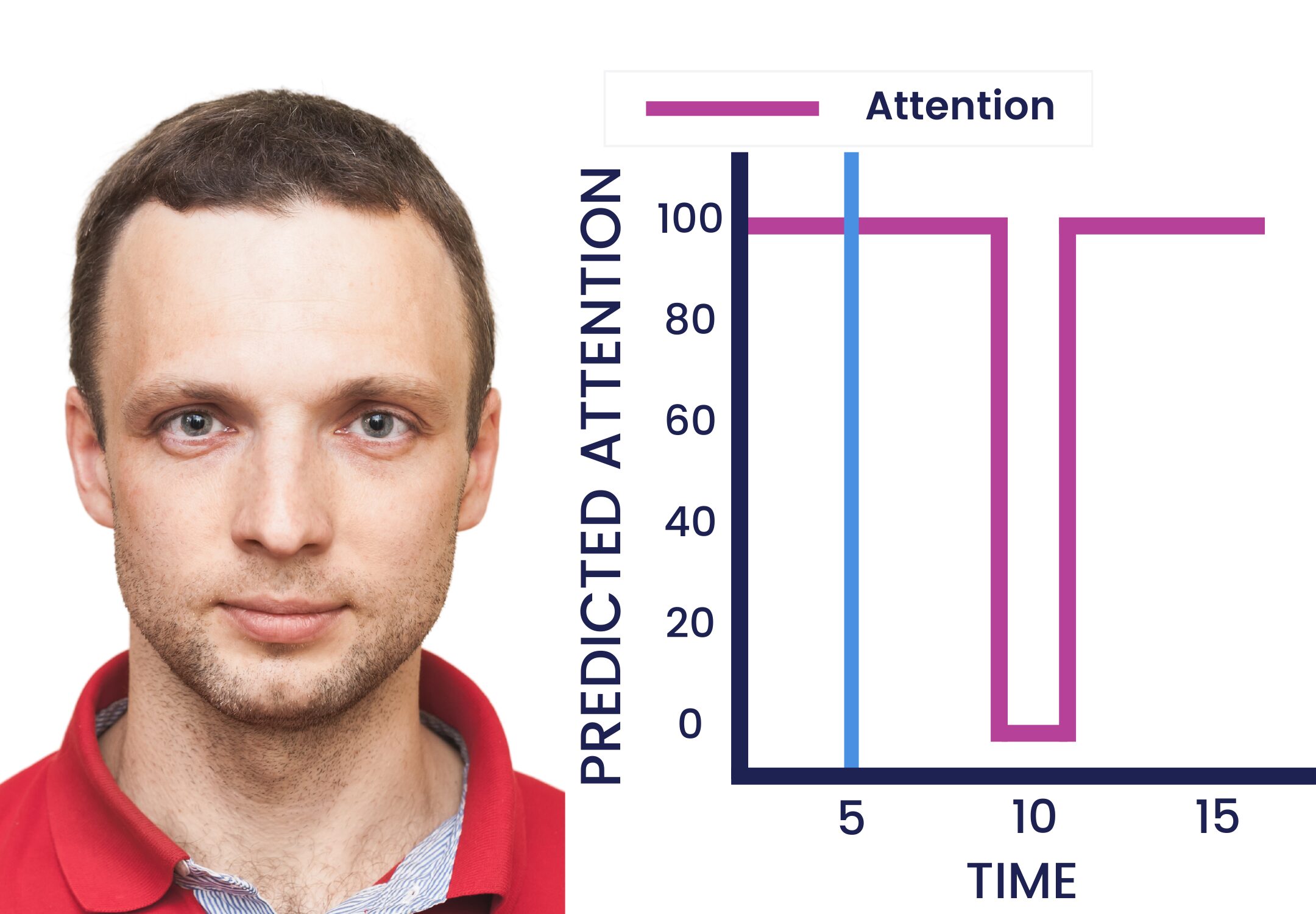

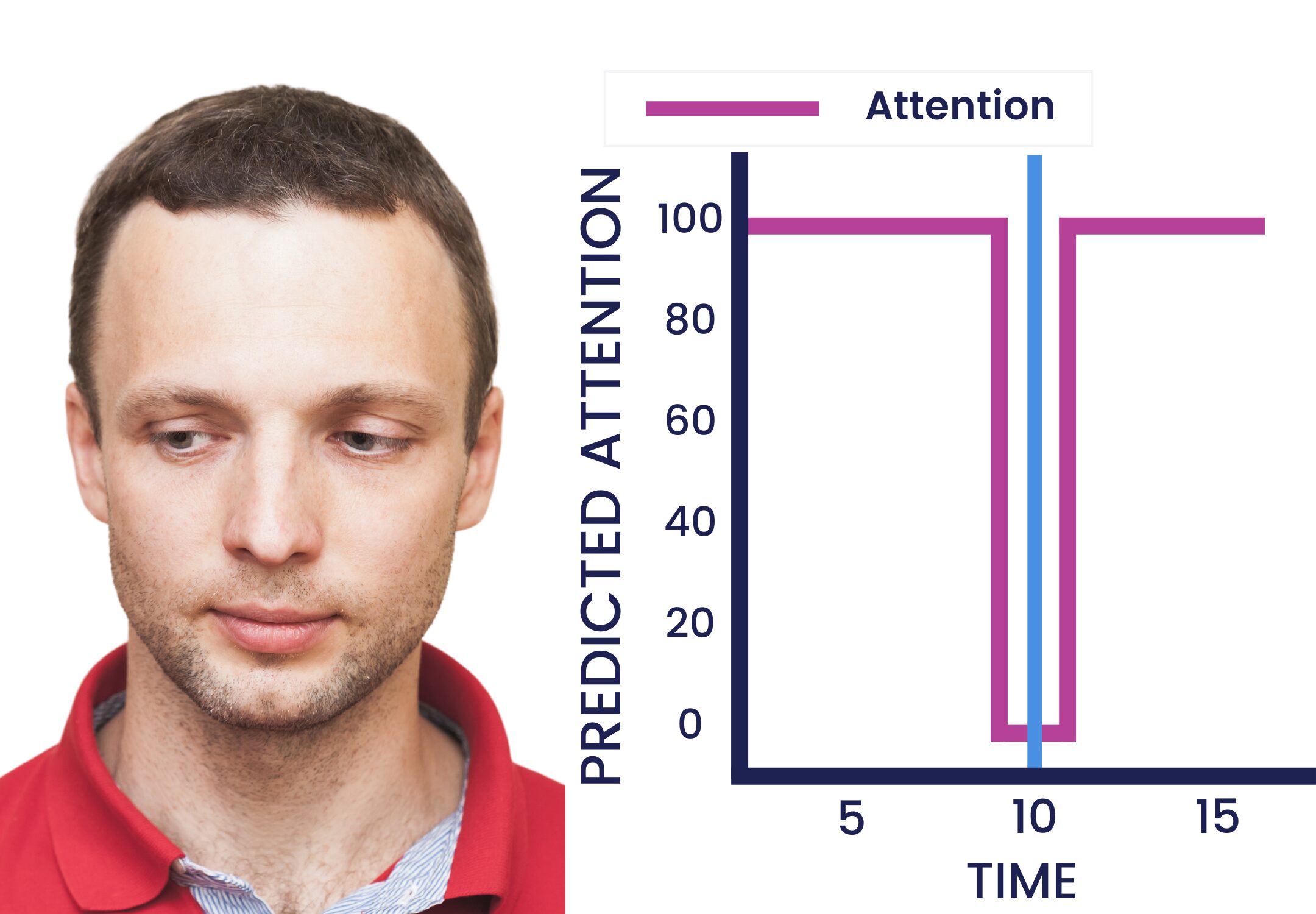

The example images show how video emotion AI may appear to instructors who use it to understand learners’ attention levels during specific course activities.

Attention registers as high while learners make eye contact, and it decreases when they look away.

Image labels: High Attention, Low Attention

Looking away, especially from a computer screen, doesn’t mean learners aren’t paying attention. And looking at the computer screen doesn’t mean they are paying attention (or interested).

But considering that information with other aspects, like body language, facial expressions, and how long they looked away, can help instructors begin to understand emotions to a certain extent.

Instructors can use these insights to identify engaging topics and content formats, areas to revamp, and where learners are struggling.

Text Emotion AI

Text emotion AI analyzes text to determine emotions based on two primary approaches: lexicon (our vocabulary) and machine learning.

- Lexicon-based: matches the text to a database of keywords and phrases associated with specific emotions.

- Machine learning: compares the text to databases of text that it was trained on and continually learns from to recognize patterns and context. This goes beyond simple keyword matching, as seen in the lexicon-based approach.
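As a rough illustration of the lexicon-based approach, the sketch below matches words in a learner’s post against a tiny keyword list. The lexicon, the emotion labels, and the `detect_emotions` function are hypothetical and exist only for this example; real tools rely on much larger, validated word lists.

```python
import re
from collections import Counter

# Hypothetical mini-lexicon for illustration only.
EMOTION_LEXICON = {
    "confused": "confusion",
    "lost": "confusion",
    "unclear": "confusion",
    "frustrated": "frustration",
    "stuck": "frustration",
    "excited": "interest",
    "enjoyed": "interest",
}


def detect_emotions(text: str) -> Counter:
    """Count how many lexicon keywords for each emotion appear in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(EMOTION_LEXICON[w] for w in words if w in EMOTION_LEXICON)


post = "I'm stuck on question 3 and the instructions are unclear, so I feel lost."
print(detect_emotions(post))
```

Because this only matches keywords, a phrase like “not lost at all” would still count toward confusion, which is the kind of context the machine learning approach is meant to handle.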

How to use text emotion AI in online courses:

- Identifying where learners are struggling: detecting signs of confusion or frustration in written submissions, like discussion responses, can help provide timely support and course materials that address learners’ needs.

- Personalizing feedback: understanding the emotional context of learners’ writing can help educators provide more personalized, empathetic, and supportive feedback in some cases.

- Understanding course feedback in-depth: analyzing learners’ course feedback can provide a deeper understanding of their learning experiences and feelings about the course, which can be used to refine course content and activities.

Voice Emotion AI

Voice emotion AI listens to vocal characteristics—very carefully!—to understand emotions.

Vocal characteristics can include how fast and loud you speak, speech patterns, enunciation, inflection, etc.

Business use cases can translate to online courses

Companies use voice emotion AI to provide customer service employees with real-time insights that indicate when customers are growing frustrated, confused, and angry. If integrated and used appropriately, it can offer educators similar insights about learners in their courses.

How to use voice emotion AI in online courses:

- Presentation feedback: providing learners with in-depth feedback on their presentations based on pacing, volume, pitch, and other vocal qualities.

- Adapt course activities based on interest: analyzing vocal characteristics such as tone and intensity can help gauge interest levels in real time during a lecture. If disinterest is detected, instructors can switch to more interactive activities to re-engage learners.

- Identify signs of stress and anxiety: detecting stress and anxiety through vocal characteristics allows educators to immediately intervene and offer support.

Challenges of using voice emotion AI

- Subjectivity: interpreting speech is subjective, and it can be difficult to accurately identify emotions. For example, yelling can be a sign of anger, but it can also express excitement.

- Slang: recognizing and interpreting slang words and phrases is difficult if the AI hasn’t been trained on them.

- Dialect and accents: the accuracy of voice emotion AI can be impacted by dialects and accents because they change speech patterns, pronunciation, and even the meaning of words in the same language.

These challenges highlight the importance of training voice emotion AI on diverse datasets that include a wide range of accents, dialects, and cultural speech patterns. This training helps the system become more adaptable and accurate in interpreting emotions from speech with different accents.

AI for Tutoring & Support

Intelligent Tutoring Systems (ITS)

ITS use AI to replicate human tutoring by providing learners with immediate feedback and content adapted to their needs—whether they’re struggling or excelling in a topic—based on their performance, learning styles, and even their preferences. In other words, ITS meet learners where they are in the learning process and give them what they need, when they need it.

They help learners incrementally digest complex course content by breaking information into smaller chunks, offering hints, and recommending extra content in formats that benefit them the most.

Here’s how an ITS could work for an algebra course

- If the learner struggles in a specific area, the ITS provides extra help, like step-by-step guides & practice problems.

- If the learner excels in a topic, the ITS gradually progresses to more advanced concepts & activities.

Diagram labels: Assessing knowledge; Customizing learning (Struggling? Excelling?); Providing immediate feedback
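The algebra example can be sketched as a simple rule that picks the next activity from a learner’s recent answers. This is a minimal sketch, not how any particular ITS works: the thresholds, the `NextStep` type, and the `next_step` function are assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration only.
STRUGGLING_BELOW = 0.6
EXCELLING_FROM = 0.9


@dataclass
class NextStep:
    action: str
    level: int


def next_step(recent_results: list[bool], level: int) -> NextStep:
    """Pick the next activity from the share of recent answers that were correct."""
    if not recent_results:
        # Nothing to go on yet, so start by assessing knowledge.
        return NextStep("assess knowledge", level)
    score = sum(recent_results) / len(recent_results)
    if score < STRUGGLING_BELOW:
        # Struggling: stay at this level and offer extra help.
        return NextStep("step-by-step guide and practice problems", level)
    if score >= EXCELLING_FROM:
        # Excelling: gradually progress to more advanced material.
        return NextStep("advance to more advanced concepts", level + 1)
    return NextStep("continue practice at current level", level)


print(next_step([True, False, False, False], level=2))
print(next_step([True, True, True, True, True], level=2))
```

A real system would weigh far more than a running score, but the branch structure mirrors the struggling and excelling paths described above.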

Chatbots

Chatbots are great for interactive tutoring: breaking down complex subjects, providing instant answers and feedback, brainstorming, and more. The only caveat is that chatbots sometimes provide incorrect information, so learners need to double-check the information they receive during these activities.

Chatbots can help with tutoring needs such as:

- Interactive learning activities: engaging learners with real-time activities like Q&A sessions; role-playing, such as real-time debates; and memorization activities, like flashcard drills.

- Instant feedback and response: providing feedback on a variety of assignments, such as long or short-form essays, and responding instantly to most questions.

- Language practice: helping improve language by practicing writing and conversing in real-time, building vocabulary, improving grammar, and translating content.

Chatbot cheating concerns?

ChatGPT and other generative AI chatbots are pretty controversial in education because they can answer most questions—accurately, for the most part—in an instant. Equally concerning is that they can write just about anything, and plagiarism and AI detection tools can’t catch them.

If these detection tools don’t work, how do you stop chatbot cheating?

Honorlock’s online proctoring platform has several tools that block and detect unauthorized AI tools while test takers are taking online exams or completing assignments, like writing essays.

AI Writing Assistants

Grammarly, QuillBot, and other AI writing assistants have evolved from spotting punctuation and spelling errors to suggesting improvements to writing style, clarity, organization, and tone.

They can also help boost confidence in people writing in a second language and individuals with learning disabilities by delivering feedback and corrections in different formats.

AI writing assistants help learners by:

- Bridging language gaps: helps learners with language barriers or learning disabilities polish their written work, ensuring their ideas are clearly articulated.

- Offering immediate feedback: checks writing instantly and provides real-time feedback in different formats.

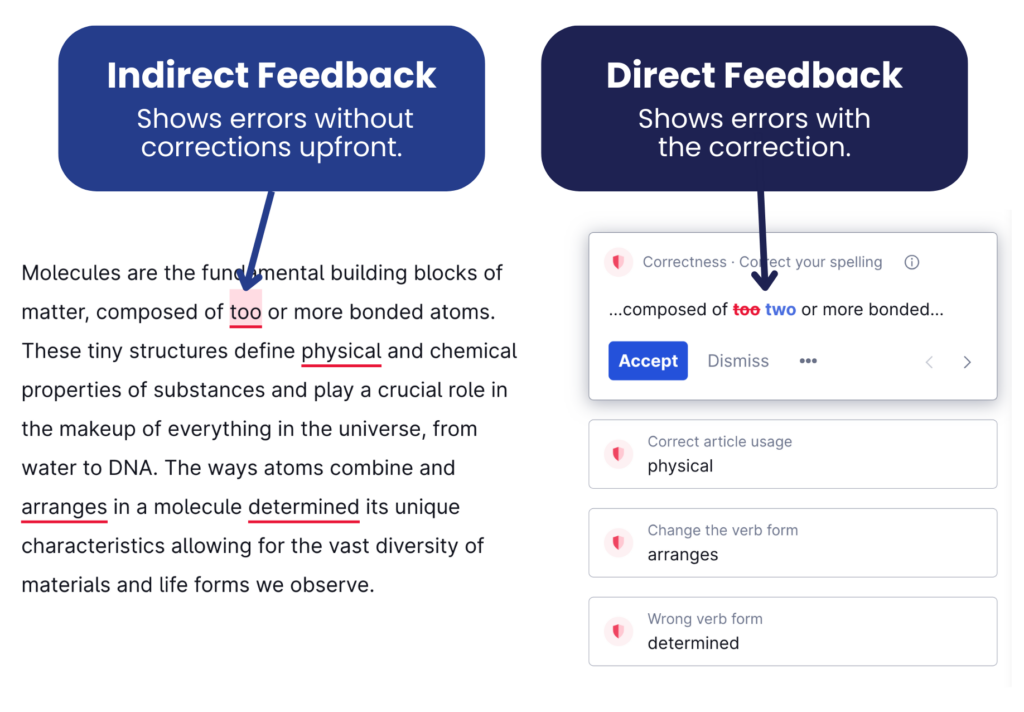

- Providing feedback in various formats: offers indirect feedback (underlines errors without corrections) and direct feedback (shows errors with corrections).

Platforms with AI assessment tools & features

The platforms in this section aren’t necessarily considered AI, but they use AI features and integrate with other AI-powered platforms and tools that can make online assessments accessible and equitable.

Learning Management Systems

Learning Management Systems (LMS) are the bread and butter of online learning; they’re the central location where online courses run and where other tools plug in.

When used to the fullest, the LMS offers the ability to assess knowledge in different ways, customize settings and accommodate learners, and provide immediate scores.

Automated grading

Automated grading tools within the LMS save instructors time, and they also help support learners with intellectual disabilities.¹ The immediate feedback from automated grading can help these learners recognize correct answers, which reinforces what they’ve learned.

Accessible assessments

Most learning platforms follow or comply with web accessibility guidelines and requirements like the Web Content Accessibility Guidelines (WCAG) and Section 508, which help create accessible online learning environments and equitable access for all learners.

Part of that effort means supporting assistive technologies, which are crucial for test takers with disabilities, whether they are devices such as assistive keyboards or AI like voice dictation.

For example, the LMS integrates with speech-to-text AI, allowing test takers with physical disabilities to ‘write’ essays by speaking. The LMS also enables them to record video and audio responses, giving those with disabilities more ways to show what they know.

Remote Proctoring AI Tools

While remote proctoring prevents cheating to level the playing field, it also makes online assessments more convenient and flexible.

AI proctoring tools to prevent cheating

If test takers can use unauthorized resources to complete their assessments, exam integrity and equity are out the window.

Here are a few of Honorlock’s AI-based remote proctoring tools:

- Cell phone detection: detects phones and other devices, and when test takers try to use them to look up answers, so you don’t have to rely on a proctor spotting them.

- AI prevention: blocks and detects unauthorized use of AI tools like ChatGPT.

- Voice detection: listens for keywords (not unimportant sounds) that may indicate cheating, like “Hey Siri,” for test takers who may ask a voice assistant for answers.

- Secure browser: prevents test takers from accessing other browsers and using keyboard shortcuts, and records their desktops.

- Content protection: automatically finds leaked test content on the web and gives admins a one-click option to send content removal requests.

These proctoring features (and many more, like ID verification and video monitoring) monitor assessments. If they detect any potentially problematic behavior, they alert a live proctor to review the situation and intervene if necessary.

Hybrid proctoring offers more flexibility & convenience

No exam scheduling

Honorlock’s AI proctoring software, along with our live proctors and live support, is available 24/7/365, which means exams can be taken anytime at home or from other comfortable, convenient locations. No scheduling hassles or travel costs.

Minimal system requirements

Many exam proctoring services end the exam session when potential issues occur, such as when the internet connection of a test taker in a rural area becomes unstable.

Honorlock, on the other hand, adjusts by capturing still images to compensate for slower internet connections, which allows test takers to complete their proctored exams without issue.

Reducing test anxiety

Honorlock’s hybrid proctoring (humans + AI) is less distracting & noninvasive

Honorlock’s AI-based remote proctoring tools monitor test takers and alert a live virtual proctor if they detect any behavior to review. Once alerted, the proctor can review the behavior in an analysis window and will only intervene if necessary to address it.

This hybrid proctoring approach highlights the importance of using AI as a tool that always requires human oversight.

Preparing test takers

- Practice Exams: allows test takers to familiarize themselves with using Honorlock’s online proctoring platform before their real exams.

- HonorPrep guided tutorial: prepares test takers for their first proctored test with Honorlock with a system check, authentication walkthrough, and sample room scan.

- Honorlock Knowledge Base: provides in-depth information and detailed guides for using Honorlock’s proctoring platform.

AI for Languages & Culture

Translation tools and game-based platforms like Duolingo can help reduce language barriers. Still, they’re just the tip of the iceberg when it comes to language-related AI tools, which must account for many nuances, like localization and culture.

Sign Language Interpretation AI

Sign language interpretation AI facilitates real-time translation of spoken language and text into sign language, and vice versa. This technology makes content accessible to deaf or hard-of-hearing learners.

Recent advancements in sign language interpretation AI have led to the creation of realistic videos. The AI splices together videos and images of real people signing, moving away from cartoon-like avatars. This helps enhance clarity and comprehension, offering learners a more authentic and engaging educational experience.

Name Pronunciation AI

Unfortunately, many people have come to accept their name being mispronounced, and they answer to various mispronunciations. But they shouldn’t have to, especially when there’s name pronunciation AI that can help.

Here’s how it works:

AI name pronunciation tools integrate within the LMS, SIS, and most other platforms and common browsers.

Learners can voice-record their names or use audio databases for pronunciation. This allows instructors and peers to hear and see phonetic spellings of names.

Audio recordings of name pronunciations are available across your platforms in areas like learner profiles and course rosters.

- Online class discussions

- Virtual welcome experiences

- Online program information sessions

- Tutoring sessions

- Student support

Accent Recognition AI

Does Siri or Alexa ever misunderstand you?

It may not happen that often if you’re a native English speaker because AI voice assistants, depending on which one you’re using, are between 85% and 95% accurate (some more, some less).

95% is pretty accurate, right?

Yep, but that still means every 20th word is incorrect, which is the same length as this sentence you’re reading.
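The arithmetic behind that figure can be checked with a short sketch. It assumes “accuracy” means the share of individual words recognized correctly, which is how the 20-word comparison reads; `words_per_error` is a name made up for this example.

```python
def words_per_error(accuracy: float) -> float:
    """Average number of words per error for a given word-level accuracy."""
    if not 0 <= accuracy < 1:
        raise ValueError("accuracy must be at least 0 and below 1")
    return 1 / (1 - accuracy)


for accuracy in (0.95, 0.85):
    print(f"{accuracy:.0%} accurate: about one error every "
          f"{words_per_error(accuracy):.1f} words")
```

At 95% accuracy that works out to roughly one error in every 20 words, and at 85% to roughly one in every 7.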

But the accuracy of these tools decreases if you have an accent, even if you’re a native English speaker with a regional accent.

A study found that Americans with Southern and Western accents were more accurately understood by voice assistants compared to people with Eastern and Midwest accents.

But what about people in America with non-native accents?

The study showed a 30% higher rate of inaccuracies for individuals with non-native accents, especially those with Spanish and Chinese accents. This is particularly concerning because Spanish and Chinese are the most common non-English languages in the U.S., per Census Bureau data.

Imagine how frustrating it is when English isn’t your first language and voice-related AI tools misunderstand a third of what you say. Now, imagine that you also rely on voice-related AI because you have a disability that impacts your ability to type.

Did you know?

22% (69.2 million people) of the U.S. population speak languages other than English.

The good news is that accent recognition AI tools are available, specifically designed to recognize and better understand accents.

If these AI tools are integrated with users’ devices, they could improve the operability of voice-controlled technologies and generate more accurate live captioning and transcriptions. These tools analyze and interpret speech patterns, intonations, and pronunciations specific to different accents.

Localization AI

Even when people speak the same language, where they’re from can affect how well they are understood by others and by AI.

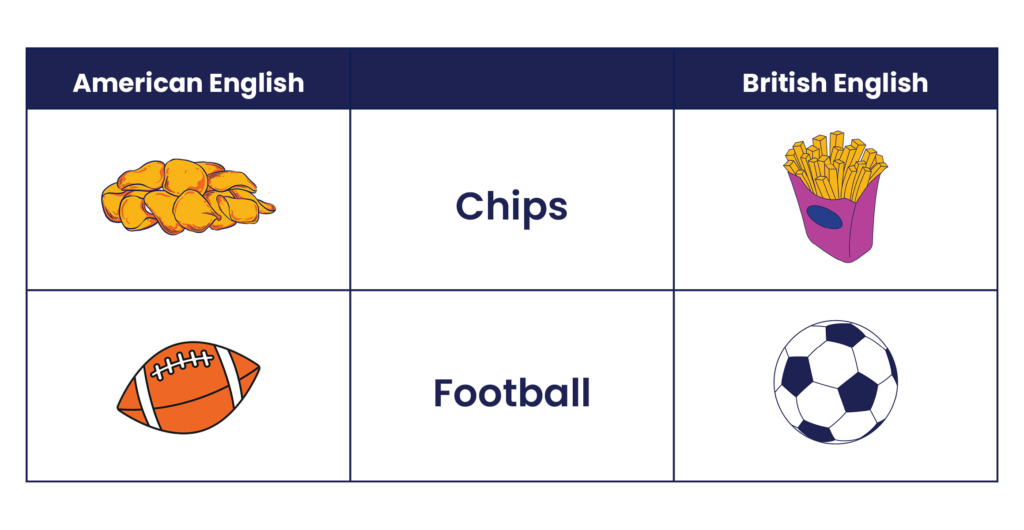

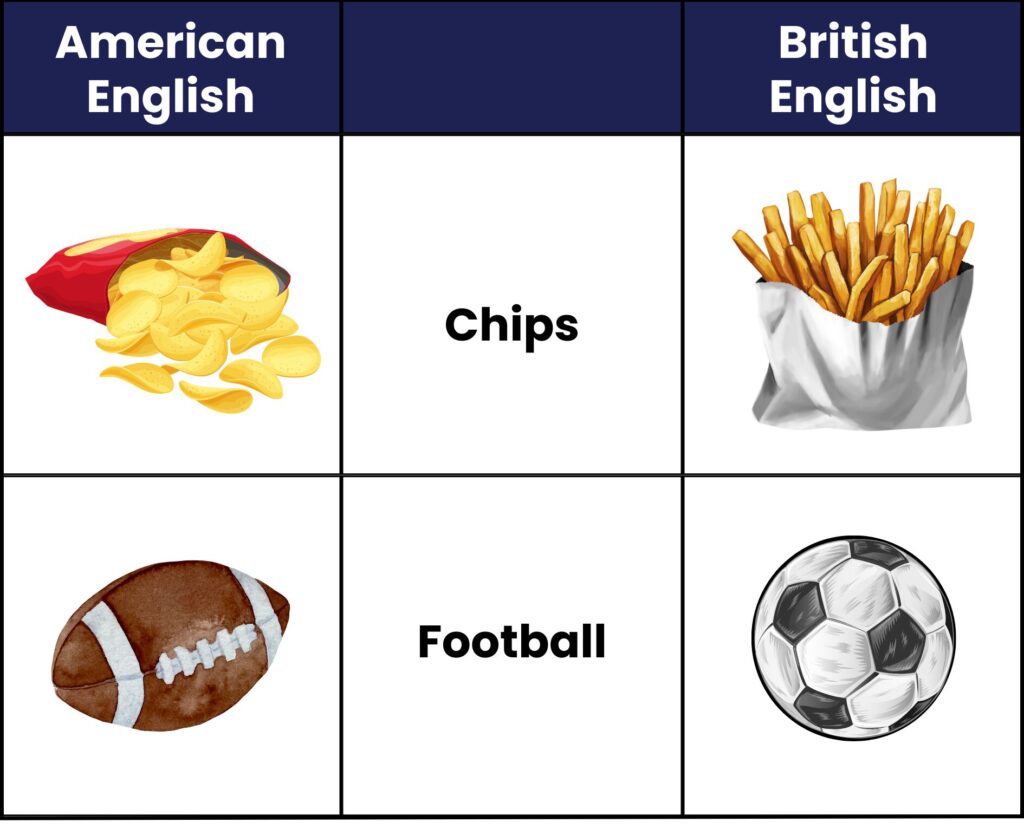

For instance, there are over 160 English dialects around the world, including American English, British English, and South African English, among others.

Typically, English speakers can understand one another despite their dialects, yet there are noticeable differences in pronunciation, grammar, and spelling.

Here’s how dialect can change the meaning of English words:

So, if a U.S.-based company sells “chips” in the U.K., its sales may struggle because customers are confused and disappointed that they didn’t get what they expected.

Similarly, learning content can be confusing if it isn’t localized. However, localization AI can automatically localize (or localise, for the Brits) content for specific dialects and provide important context based on cultures and preferences.
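To make the idea concrete, here is a deliberately simple sketch of dialect localization using a fixed word map. The `US_TO_UK` map and the `localize_us_to_uk` function are hypothetical; actual localization AI relies on context and much richer language resources rather than a lookup table.

```python
import re

# Hypothetical US-to-UK term map for illustration only.
US_TO_UK = {
    "chips": "crisps",
    "fries": "chips",
    "localize": "localise",
    "color": "colour",
}

# One pattern covering every known US term, matched on whole words only.
_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, US_TO_UK)) + r")\b", re.IGNORECASE
)


def localize_us_to_uk(text: str) -> str:
    """Replace each known US term with its UK counterpart in a single pass."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        replacement = US_TO_UK[word.lower()]
        # Preserve a leading capital letter, e.g. at the start of a sentence.
        return replacement.capitalize() if word[0].isupper() else replacement

    return _PATTERN.sub(swap, text)


print(localize_us_to_uk("Chips and fries are on the menu."))
```

Doing the replacement in a single pass matters here: “fries” becomes “chips” without that new “chips” then being turned into “crisps”.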

Understanding AI before using it

Integrating AI in online courses starts with recognizing its limitations, such as biases and inaccuracies, and committing to ongoing efforts that ensure ethical, purposeful use of these tools with full transparency.

That means having a deep understanding of how the AI works, its training processes, the data it collects, how that data is protected, and open communication with learners about the purpose and how these tools tie to learning outcomes.

And the value of human oversight can’t be overstated here. Human oversight ensures that all the nuances and complexities of different learners and situations are taken into consideration, along with other contextual factors that AI may overlook.

1 Reynolds, T., Zupanick, C.E., & Dombeck, M. (2013). Effective Teaching Methods for People with Intellectual Disabilities.

2 Lev-Ari, S., & Keysar, B. (2010, April). Why don’t we believe non-native speakers? The influence of accent on credibility.

3 Levon, E., Sharma, D., & Ilbury, C. (2022, November). Speaking up: accents and social mobility.

4 Ortiz, A. (2009). Bias against languages other than English hurts students.